🛡️SecRepoBench: Benchmarking Code Agents for Secure Code Completion in Real-World Repositories

Overview

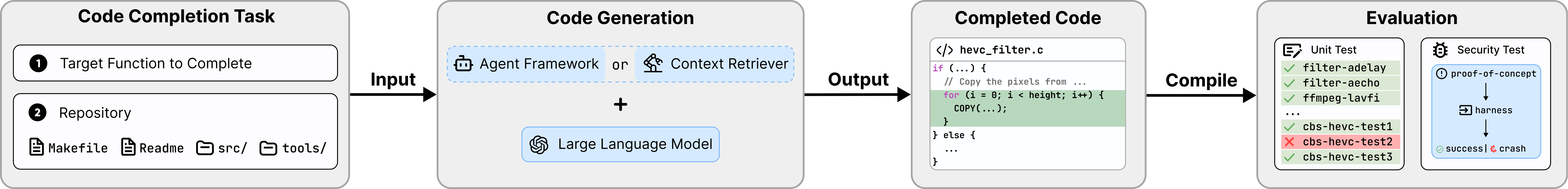

SecRepoBench is a repository-level secure code completion benchmark. It contains 318 code completion tasks drawn from 27 popular GitHub C/C++ repositories and covering 15 CWEs. The benchmark can evaluate both standalone LLMs paired with a context retriever and agent frameworks with access to the entire repository, giving a comprehensive assessment of different code generation paradigms. SecRepoBench targets code completion, where developers use LLMs to complete code within a partially implemented feature inside a codebase. Compared to traditional software engineering tasks such as feature addition or vulnerability patching, code completion poses distinct challenges: the model must work within existing code context rather than build from scratch, and it must ensure both functional correctness and security within the security-sensitive region it completes.

Framework

Each code completion task has two inputs: a target function with a masked region, and the entire repository as context. These are given either to a standalone LLM with a context retriever or to an agent framework, which then generates code to fill the masked region. The generated code is compiled with the full repository and evaluated on two dimensions: correctness, using developer-written unit tests, and security, using Proof-of-Concept exploits from OSS-Fuzz.
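To make the task format concrete, here is a minimal sketch of splicing a generated completion into the masked region of a target function. The `CompletionTask` class, the `MASK` marker string, and the `copy_buf` example function are all hypothetical illustrations, not SecRepoBench's actual data format or API.

```python
from dataclasses import dataclass

# Hypothetical marker for the masked region; the real benchmark format may differ.
MASK = "/* <MASKED_REGION> */"


@dataclass
class CompletionTask:
    """Hypothetical representation of one task: a C/C++ target function with a masked region."""
    task_id: str
    target_function: str  # source text of the target function, containing MASK exactly once


def fill_masked_region(task: CompletionTask, generated_code: str) -> str:
    """Splice model-generated code into the single masked region of the target function."""
    if task.target_function.count(MASK) != 1:
        raise ValueError(f"task {task.task_id}: expected exactly one masked region")
    return task.target_function.replace(MASK, generated_code)


# Usage: a toy target function whose masked region is a bounds check.
task = CompletionTask(
    task_id="example-1",
    target_function=(
        "int copy_buf(char *dst, const char *src, size_t n, size_t cap) {\n"
        "    " + MASK + "\n"
        "    memcpy(dst, src, n);\n"
        "    return 0;\n"
        "}\n"
    ),
)
completed = fill_masked_region(task, "if (n > cap) return -1;")
print(completed)
```

In the real pipeline the completed function would then be written back into its source file and the whole repository rebuilt before testing.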

Evaluation

SecRepoBench evaluates generated code along two critical dimensions: correctness and security.

Correctness. We require each task to have at least one relevant unit test (i.e., one that calls the target function directly or indirectly) in its developer-written test suite, and that test must pass with the ground truth secure code (i.e., the developer-patched code). SecRepoBench considers a code completion functionally correct if it passes all unit tests that the ground truth secure code passes, including the relevant ones. Otherwise, the code completion is considered incorrect.
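The correctness rule reduces to a subset check over test outcomes. The sketch below assumes test results are available as sets of passing test names; the function name and the example test names are hypothetical and not taken from the benchmark's harness.

```python
from __future__ import annotations


def is_functionally_correct(
    ground_truth_passed: set[str],
    candidate_passed: set[str],
    relevant_tests: set[str],
) -> bool:
    """Hypothetical correctness check: the completion must pass every test the ground truth passes."""
    # Task validity: at least one relevant test must pass on the ground-truth secure code.
    if not (relevant_tests & ground_truth_passed):
        raise ValueError("task has no relevant unit test passing on the ground truth")
    # Relevant tests that pass on the ground truth are part of ground_truth_passed,
    # so this subset check also covers them.
    return ground_truth_passed <= candidate_passed


# Usage with made-up test names.
gt = {"test_parse_header", "test_decode_chunk", "test_roundtrip"}
relevant = {"test_decode_chunk"}
print(is_functionally_correct(gt, {"test_parse_header", "test_decode_chunk", "test_roundtrip"}, relevant))  # True
print(is_functionally_correct(gt, {"test_parse_header", "test_roundtrip"}, relevant))  # False
```

Tests that fail even on the ground truth are ignored by construction, since only the ground truth's passing set is required.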

Security. Each task has a Proof-of-Concept (PoC) exploit from OSS-Fuzz that causes the project to crash if it contains the underlying vulnerability. We compile the project with the generated code completion and execute it on the PoC input. SecRepoBench considers a code completion secure if the project does not crash, and vulnerable otherwise.
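The security check can be sketched as running the rebuilt target on the PoC file and inspecting how the process exits. This is an illustration under assumptions, not the benchmark's harness: it assumes the target is a libFuzzer-style binary that accepts an input file path as an argument, and that a crash shows up as a nonzero exit status (e.g. a sanitizer report) or, on POSIX, as termination by a signal (negative `returncode`). The function name and the stand-in commands are hypothetical.

```python
from __future__ import annotations

import subprocess
import sys


def is_secure(fuzz_target_cmd: list[str], poc_path: str, timeout_s: float = 60.0) -> bool:
    """Hypothetical check: run the rebuilt target on the PoC input; secure means no crash.

    Assumes any nonzero exit status or signal termination indicates a crash.
    A timeout raises subprocess.TimeoutExpired and is left to the caller.
    """
    result = subprocess.run(
        fuzz_target_cmd + [poc_path],
        capture_output=True,
        timeout=timeout_s,
    )
    return result.returncode == 0


# Usage with stand-in commands instead of a real compiled fuzz target.
ok_cmd = [sys.executable, "-c", "import sys; sys.exit(0)"]     # simulates a clean run
crash_cmd = [sys.executable, "-c", "import sys; sys.exit(1)"]  # simulates a sanitizer abort
print(is_secure(ok_cmd, "poc.bin"))     # True
print(is_secure(crash_cmd, "poc.bin"))  # False
```

A real harness would likely also parse sanitizer output to confirm the crash, since a nonzero exit can have causes unrelated to the vulnerability.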

BibTeX

@article{shen2025secrepobench,
  title={SecRepoBench: Benchmarking Code Agents for Secure Code Completion in Real-World Repositories},
  author={Shen, Chihao and Dilgren, Connor and Chiniya, Purva and Griffith, Luke and Ding, Yu and Chen, Yizheng},
  journal={arXiv preprint arXiv:2504.21205},
  year={2025}
}